Before we start to grapple with psychoacoustics, it is useful to know a little about how human hearing works. Figure 1 shows a diagram of the main components. Sound waves from the outside world enter through the external part of the ear, into the auditory canal. This is an acoustic duct, which functions rather like an ear trumpet to concentrate the energy of the sound wave before it reaches the tympanic membrane, or eardrum. The sound level at the eardrum is boosted by up to 15 dB compared to the incident sound wave, peaking around 3 kHz. The eardrum is a membrane that is set into vibration by the incoming sound wave. The next part of the system is the middle ear, containing three tiny bones (the malleus, incus and stapes) which act as a kind of lever system to increase the force available from the vibration.
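A 15 dB boost is easier to picture as a plain ratio. The minimal sketch below uses the standard decibel conventions: 20 log10 for a ratio of pressure amplitudes and 10 log10 for a ratio of intensities, because intensity goes as the square of pressure. The function names are purely illustrative.

```python
def db_to_pressure_ratio(gain_db: float) -> float:
    """Convert a level gain in dB into a ratio of pressure amplitudes."""
    # Sound pressure level uses 20*log10(p/p_ref), so invert with a factor of 20
    return 10.0 ** (gain_db / 20.0)


def db_to_intensity_ratio(gain_db: float) -> float:
    """Convert a level gain in dB into a ratio of intensities (power per unit area)."""
    # Intensity is proportional to pressure squared, hence the factor of 10
    return 10.0 ** (gain_db / 10.0)


if __name__ == "__main__":
    boost = 15.0  # peak ear-canal boost quoted in the text
    print(f"{boost} dB -> pressure x{db_to_pressure_ratio(boost):.2f}, "
          f"intensity x{db_to_intensity_ratio(boost):.1f}")
```

For 15 dB this gives a pressure ratio of about 5.6 and an intensity ratio of about 32.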

Now we enter the most important region, the inner ear. The complicated shape drawn in purple indicates a cavity within the bone of your skull. It has two main components. The semi-circular canals are associated with our sense of orientation and balance: together with the neighbouring organs of the vestibular system, they behave rather like the accelerometers and gyros that you may have inside your mobile phone, sensing accelerations and rotational movement.

The component we are most interested in is the second one, the cochlea. It is wound up into a shape like a snail shell: indeed, the word “cochlea” derives from the Greek for a snail. The cochlea is a bony cavity, and it is filled with fluid. It has two “windows”: flexible membranes that hold the fluid in but are able to bulge in and out. The final bone of the chain in the middle ear, the stapes, presses against one of these windows, the oval window, and pushes it in and out in response to the incoming sound wave. The fluid inside the cochlea is virtually incompressible, so to accommodate this movement there is a second window, the round window, which can bulge in the opposite sense to the oval window.

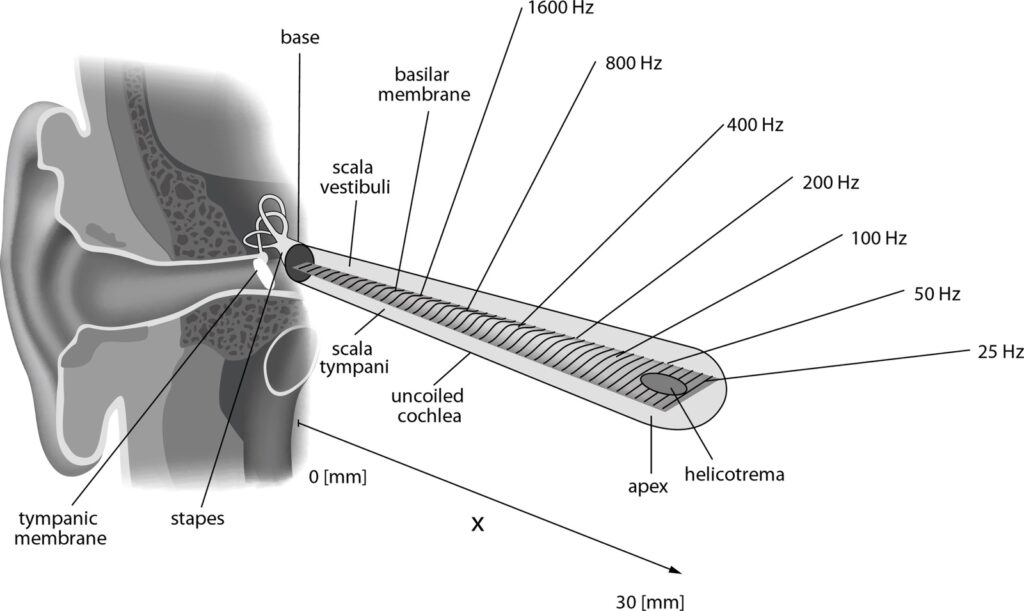

To see the point of this, we need to know a bit about what is inside the cochlea. Figure 2 shows an “unrolled” view of it. Glossing over a lot of complicated anatomical details (see Wikipedia if you want to know more), the crucial component for our purpose is called the basilar membrane. This membrane bridges across the cochlea from side to side. The oval window introduces acoustic disturbances on one side of it, while the round window allows them to “escape” on the other side. The resulting fluid flow in the cavity exerts a force across the basilar membrane, making it vibrate.

Now for the important part: the mechanical properties of the basilar membrane vary, by an enormous factor, along the length of the cochlea. At the end near the two windows, it is narrow and stiff, while at the far end it is wider and floppier. The result is that the basilar membrane functions as a kind of mechanical frequency analyser. If the incoming sound wave is sinusoidal, the vibration response of the basilar membrane is concentrated in a particular region: near the base for a high frequency, near the far end for a low frequency. Some positions of maximum response for different frequencies are indicated in Fig. 2 (but note that more recent research suggests that the lowest indicated frequency may be a bit misleading: the peak frequency at the end of the cochlea may be 50 Hz).
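The idea of a “mechanical frequency analyser” can be caricatured as a bank of independent damped oscillators, one per position along the membrane, each with a natural frequency set by the local stiffness. This is only a cartoon: the real basilar membrane is a coupled, travelling-wave system with active feedback. The sketch assumes, purely for illustration, an exponential place-frequency map running from 20 kHz at the base to 50 Hz at the apex (the latter being the figure mentioned above), and the same quality factor Q = 5 everywhere. All names and constants are hypothetical.

```python
import numpy as np

# Hypothetical place-frequency map: natural frequency falls exponentially
# from the base (x = 0, near the windows) to the apex (x = 1, the far end).
F_BASE_HZ = 20_000.0  # assumed value at the narrow, stiff end
F_APEX_HZ = 50.0      # assumed value at the wide, floppy end
Q_FACTOR = 5.0        # assumed sharpness of each local resonance


def natural_frequency(x: np.ndarray) -> np.ndarray:
    """Natural frequency (Hz) of the oscillator at fractional position x."""
    return F_BASE_HZ * (F_APEX_HZ / F_BASE_HZ) ** x


def response_amplitude(f_drive: float, f_nat: np.ndarray, q: float = Q_FACTOR) -> np.ndarray:
    """Steady-state displacement amplitude of damped oscillators driven by unit force per unit mass."""
    w = 2.0 * np.pi * f_drive
    wn = 2.0 * np.pi * f_nat
    return 1.0 / np.sqrt((wn**2 - w**2) ** 2 + (w * wn / q) ** 2)


def place_of_peak(f_drive: float, n_points: int = 1000) -> float:
    """Fractional position (0 = base, 1 = apex) where a sinusoid produces the largest response."""
    x = np.linspace(0.0, 1.0, n_points)
    amp = response_amplitude(f_drive, natural_frequency(x))
    return float(x[np.argmax(amp)])


if __name__ == "__main__":
    for f in (100.0, 1000.0, 10_000.0):
        print(f"{f:7.0f} Hz -> peak response at x = {place_of_peak(f):.2f}")
```

High frequencies peak near the base and low frequencies near the apex, as described above; with these assumed numbers 1 kHz lands roughly halfway along.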

The basilar membrane carries a large number of hair cells, which are the neural sensors for vibration. So, at least to an extent, the information reaching the brain along the auditory nerve is already sorted out into its frequency content, simply because nerve fibres originating from hair cells attached at different positions on the basilar membrane will tend to respond most strongly to sounds in different frequency ranges, appropriate to the position of each one. We will see in section 6.4 that some aspects of this mechanical filtering action of the basilar membrane carry over rather directly into the way we hear.

When you listen to sound that is sufficiently quiet, this mechanical response of eardrum, middle ear and basilar membrane involves small-amplitude vibration, and can be described reasonably well by the kind of linear theory we have been using up to now. But the sound does not need to become very loud before the response starts to exhibit progressive nonlinearity. In any case, from here on the process of hearing is very definitely nonlinear. The nervous system communicates in the form of electrical pulses, so the brain is essentially a digital device. The information from individual hair cells is coded, somehow, in the rate and detailed timing of the pulses generated and sent off along the auditory nerve by the neurons connected to them.

However, this is not the end of mechanical effects that influence hearing. Not all the hair cells are sensors. Some of them, indeed the majority of them, behave like loudspeakers: they cause additional motion of the basilar membrane in response to nerve signals coming back from the brain or from a more local reflex action. These active hair cells are known as outer hair cells, whereas the sensing hair cells are inner hair cells. Sometimes, the action of the outer hair cells results in sound coming out of the ears: so-called otoacoustic emissions. Some kinds of tinnitus are the result of real sounds generated in the ear in this way. The details of the excitation of the basilar membrane by these active hair cells are still a matter of current research, but it appears that this process is crucial to the phenomenal range of loudness that our ears are capable of responding to.

For more detail of all aspects of cochlear mechanics, see the comprehensive review by Ni et al. [1].

There is one more thing we need to mention about ears and hearing. So far, we have talked about how one single ear works, but of course we have two ears. It is by combining the information from them that we are able to tell the direction a sound is coming from — at least up to a point. Figure 3 shows in schematic form the head of a listener. The lower part of the figure shows a top view of the head, with a sound wave passing by in the direction of the red arrow.

We can see that the sound wave interacts with the listener’s ears in slightly different ways, and these give two possible clues to the direction the sound has come from. First, there is a timing difference: the sound wave reaches one ear before the other. If the sound is a continuous signal like a sine wave, this will produce a phase difference between the signals at the two ears. On the other hand, if the sound contains transient events like clicks, the listener’s brain may be able to detect the time difference directly by comparing the signals from the two ears.
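The size of the timing clue can be estimated with a deliberately crude model: a distant source at azimuth θ, ears treated as two points a distance d apart, and a straight-line path difference d sin θ that ignores the extra distance the wave travels round the head. The ear spacing of 0.18 m is an assumed typical value, and the function names are illustrative. For a sinusoid of frequency f, a delay Δt becomes a phase difference of 360 f Δt degrees.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C
EAR_SPACING = 0.18      # m, assumed typical distance between the ears


def interaural_time_difference(azimuth_deg: float, spacing: float = EAR_SPACING) -> float:
    """Arrival-time difference (s) between the ears, ignoring diffraction round the head.

    Azimuth 0 means straight ahead; 90 means directly to one side.
    """
    return spacing * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND


def interaural_phase_difference(freq_hz: float, itd_s: float) -> float:
    """Phase difference (degrees) that a sinusoid of this frequency shows for the given delay."""
    return 360.0 * freq_hz * itd_s


if __name__ == "__main__":
    itd = interaural_time_difference(90.0)
    print(f"largest ITD ~ {itd * 1e3:.2f} ms")
    for f in (200.0, 1000.0, 3000.0):
        print(f"{f:6.0f} Hz: phase difference {interaural_phase_difference(f, itd):6.1f} deg")
```

With these assumptions the largest delay is about 0.52 ms. Because a phase difference is only defined to within a whole cycle, once it passes 180° (above roughly 950 Hz in this simple model, for a source directly to one side) a phase comparison alone can no longer tell which ear is leading.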

The second clue comes from loudness. In the case shown in Fig. 3 the sound reaches the listener’s right ear directly, but in order to reach the left ear it has to get round the head. There will be some kind of sound shadow behind the head, so that this left-ear signal will be less loud. The loudness difference will depend on the Helmholtz number associated with the size of the head and the wavelength of the sound, as was discussed in section 4.1 (see Fig. 8 there).
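A quick way to see where the head shadow starts to matter is to compute a Helmholtz number for the head at a few frequencies. The sketch assumes the Helmholtz number is taken as ka, with wavenumber k = 2πf/c and an assumed head radius a = 0.09 m; if section 4.1 uses a different length scale (a diameter, say) the numbers shift by a factor of 2 but the trend is the same.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s
HEAD_RADIUS = 0.09      # m, assumed typical value


def helmholtz_number(freq_hz: float, radius: float = HEAD_RADIUS) -> float:
    """Dimensionless ka: wavenumber times head radius."""
    k = 2.0 * math.pi * freq_hz / SPEED_OF_SOUND
    return k * radius


def crossover_frequency(radius: float = HEAD_RADIUS) -> float:
    """Frequency (Hz) at which ka = 1, i.e. wavelength comparable to head size."""
    return SPEED_OF_SOUND / (2.0 * math.pi * radius)


if __name__ == "__main__":
    print(f"ka = 1 at about {crossover_frequency():.0f} Hz")
    for f in (100.0, 600.0, 2000.0, 5000.0):
        print(f"{f:6.0f} Hz: ka = {helmholtz_number(f):.2f}")
```

With a 9 cm radius, ka = 1 falls at about 600 Hz. Well below this the head is small compared with the wavelength and casts little shadow, so the loudness clue is weak; well above it the shadow, and hence the loudness difference between the ears, becomes substantial.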

Our brains make use of both these signals to estimate the direction a sound is coming from. The details are, as ever, complicated: see the book by Brian Moore [2] for more information. One consequence of the use of loudness and timing differences between the ears is a curious but familiar experience when using headphones. If the sound is the same in both ears (a monaural recording), then obviously both the timing and the loudness are exactly the same in both ears. Your brain then makes the logical deduction that the sound source must be inside your head, and that is the perception you will usually have.

There is one more important twist in the way we localise a source of sound. We are very often listening to sounds within a room, whether that is a domestic room or a concert hall. This means that you hear each sound many times over: the direct sound from the source is followed by echoes from the walls, the ceiling, or the furniture. Our brains have evolved to cope with this potential confusion of direction — presumably not because of our need to hear music clearly, but because of an evolutionary need in the distant past to know where a predator is approaching from (echoes can come from trees or rocks, as well as from the walls of a room).

At least for echoes that follow the direct sound within a short time (about 50–100 ms), our brains recognise that the later arrivals are just further copies of the same sound, and they are fused together in your perception. You hear the sound as coming from the direction of the first arrival, which is the direct sound path. The later echoes add to the loudness, and perhaps the clarity, of the perception, but you are not explicitly aware of them as separate echoes. This effect was first described by Lothar Cremer, whom we will meet in chapter 9 because he also worked on how violin strings vibrate. Cremer called it the “law of the first wavefront”, but these days it is more commonly called the “precedence effect”. For more about it, see the Wikipedia article on the precedence effect.

One immediate application of the precedence effect is in the design of sound reinforcement systems for lecture halls, churches and so on. There will usually be a number of loudspeakers spread around the space. If the sound to each loudspeaker is delayed by an amount corresponding to the travel time of sound from the microphone, they will behave like echoes. Listeners should then hear the sound as if it came from the microphone position, enhanced in loudness and clarity by the reinforcement.
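The delay rule just described can be sketched by treating the microphone and loudspeakers as points on a floor plan: each loudspeaker's delay is simply its distance from the microphone divided by the speed of sound. The coordinates, names and the optional `extra_ms` margin are hypothetical; the margin defaults to zero so that the code follows the rule exactly as stated above.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s

Point = tuple[float, float]  # (x, y) position in metres on a floor plan


def loudspeaker_delays_ms(mic: Point, speakers: dict[str, Point],
                          extra_ms: float = 0.0) -> dict[str, float]:
    """Delay (ms) for each loudspeaker, equal to the sound travel time from the microphone.

    extra_ms is a hypothetical optional margin added to every delay.
    """
    delays = {}
    for name, pos in speakers.items():
        distance = math.dist(mic, pos)  # straight-line distance in metres
        delays[name] = 1000.0 * distance / SPEED_OF_SOUND + extra_ms
    return delays


if __name__ == "__main__":
    mic = (0.0, 0.0)
    speakers = {"front_left": (3.0, 4.0), "mid_hall": (0.0, 15.0), "rear": (0.0, 30.0)}
    for name, delay in loudspeaker_delays_ms(mic, speakers).items():
        print(f"{name:>10}: {delay:5.1f} ms")
```

A loudspeaker 5 m from the microphone, for example, needs a delay of roughly 14.6 ms.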

Echoes that arrive after about 50–100 ms are too late for the precedence effect. They are not fused into the single perception, and instead contribute to that sense of reverberation which is a particularly familiar quality of sound in large spaces like cathedrals. If a single strong reflection arrives after this time, it may be heard as a discrete echo — and then you are able to determine the direction it has come from.
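To connect the 50–100 ms window to room geometry: the delay of a reflection is its extra path length divided by the speed of sound, so 50 ms corresponds to about 17 m of extra path and 100 ms to about 34 m. The sketch below uses the approximate limits quoted in the text as thresholds; they are not sharp perceptual boundaries, and the function names and the “borderline” category are illustrative only.

```python
SPEED_OF_SOUND = 343.0  # m/s


def echo_delay_ms(direct_path_m: float, reflected_path_m: float) -> float:
    """Time (ms) by which a reflection lags the direct sound."""
    return 1000.0 * (reflected_path_m - direct_path_m) / SPEED_OF_SOUND


def classify_echo(delay_ms: float, fuse_below_ms: float = 50.0,
                  separate_above_ms: float = 100.0) -> str:
    """Rough perceptual category for a single reflection, using the approximate 50-100 ms window."""
    if delay_ms < fuse_below_ms:
        return "fused with direct sound"
    if delay_ms > separate_above_ms:
        return "too late to fuse"
    return "borderline"


if __name__ == "__main__":
    direct = 10.0  # m, direct path from source to listener
    for reflected in (14.0, 35.0, 50.0):
        delay = echo_delay_ms(direct, reflected)
        print(f"extra path {reflected - direct:4.0f} m -> {delay:5.1f} ms: {classify_echo(delay)}")
```

A reflection travelling 4 m further than the direct sound arrives about 12 ms later and would be fused, while one travelling 40 m further arrives more than 100 ms later and may be heard as reverberation or as a discrete echo.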

The precedence effect relies to a considerable degree on our ability to discriminate an early echo as coming from a different direction to the direct sound. This ability in turn relies on having information from both ears. A consequence is that if you remove this extra information by listening to a monaural recording or by listening through a keyhole, the echoes seem more prominent. The impression is that the sound is more reverberant, and details are less clear. This is one reason why recording studios are usually very “dry” spaces, with a lot of sound absorption and little reverberation. A recording made in a less dead space is likely to sound unexpectedly reverberant when it is replayed through a loudspeaker, because the information about the direction of early echoes has been lost.

[1] Guangjian Ni, Stephen J. Elliott, Mohammad Ayat, and Paul D. Teal: “Modelling cochlear mechanics”, BioMed Research International, Volume 2014, Article ID 150637, 42 pages. http://dx.doi.org/10.1155/2014/150637

[2] Brian C. J. Moore: “An Introduction to the Psychology of Hearing”, Academic Press (6th edition, 2013).